Best Practices for Securing your HPC Cloud - Part III

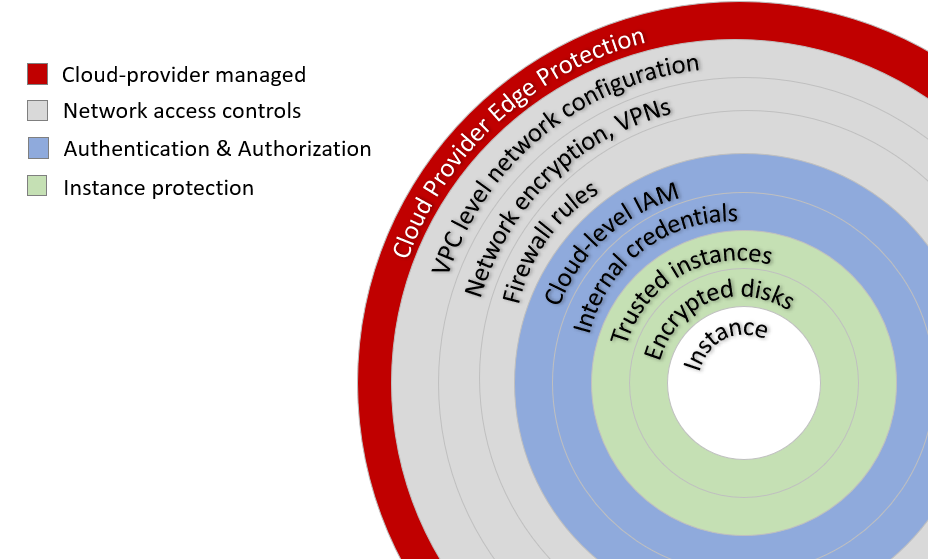

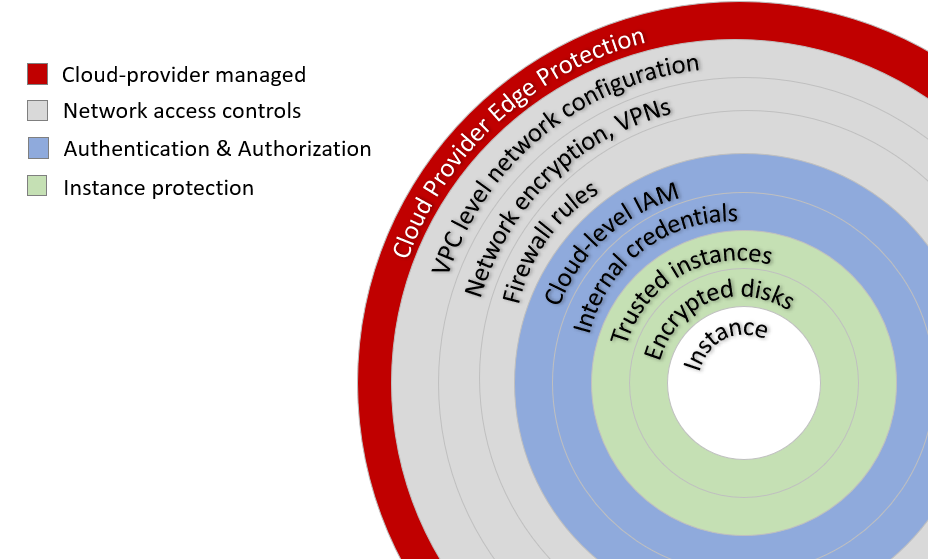

For HPC users considering a move to the cloud, security is an important topic. In the first article in this series, we began with the perimeter of our cloud security model, covering cloud provider edge protection, VPCs, network security, and firewall rules. In the second article, we explored identity and access management at the level of the cloud provider, deployed services, and individual machine instances. In this final article, we'll focus on additional measures to secure cloud instances and data, including patching, trusted instances, encrypted storage, and other security-related tools. As with the other articles in this series, we discuss security in the context of Amazon Web Services (AWS), although the same concepts apply to other cloud providers as well.

Securing cloud instances

In cloud environments, protecting the machine instances that comprise the cluster is important. We've already discussed several layers of security, including:

- Configuring VPCs appropriately and providing paths to the internet or other private networks only when necessary

- Filtering protocols at the VPC level to prevent unauthorized traffic

- Locking down ports on the cloud instance using Security Groups

- Avoiding passwords and using SSH keys for instance-level login

While these measures go a long way toward making the environment secure, there are other measures we can take as well.
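The Security Group item in the list above can be spot-checked programmatically. The sketch below is a minimal, illustrative audit, assuming input shaped like the response of boto3's `ec2.describe_security_groups()`; the `SENSITIVE_PORTS` set and the `open_to_world` helper are hypothetical names chosen for this example. It flags inbound rules that expose SSH (22) or RDP (3389) to any IPv4 or IPv6 address.

```python
from typing import Any

# Ports treated as sensitive in this sketch (an assumption; adjust to your environment).
SENSITIVE_PORTS = {22, 3389}
WORLD = ("0.0.0.0/0", "::/0")


def open_to_world(response: dict[str, Any], ports: set[int] = SENSITIVE_PORTS) -> list[tuple[str, int]]:
    """Return (GroupId, port) pairs where a sensitive port accepts traffic from anywhere.

    `response` is assumed to be shaped like boto3's ec2.describe_security_groups() output.
    """
    findings = []
    for group in response.get("SecurityGroups", []):
        for perm in group.get("IpPermissions", []):
            proto = perm.get("IpProtocol")
            if proto == "-1":
                # "-1" means all protocols and therefore all ports.
                low, high = 0, 65535
            elif proto in ("tcp", "6"):
                low, high = perm.get("FromPort", 0), perm.get("ToPort", 65535)
            else:
                continue  # UDP/ICMP rules are out of scope for this sketch
            cidrs = [r.get("CidrIp") for r in perm.get("IpRanges", [])]
            cidrs += [r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])]
            if not any(c in WORLD for c in cidrs):
                continue  # rule is restricted to specific networks
            for port in sorted(ports):
                if low <= port <= high:
                    findings.append((group["GroupId"], port))
    return findings
```

In practice you might call `open_to_world(boto3.client("ec2").describe_security_groups())`; this sketch ignores pagination and rules that reference other security groups instead of CIDR ranges.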

Keeping up with software patches

For administrators, patch management is a challenge that often doesn't get enough attention. Security-related vulnerabilities in Linux kernels and layered programs are discovered constantly, and readily available rootkits and exploit kits allow even unsophisticated attackers to exploit known security holes easily. When applying patches and software updates, administrators are always concerned about breaking things that are working, so they need to balance security risks against operational risks.

Some administrators rely on security bulletins from the Computer Emergency Readiness Team (CERT) or commercial services like Red Hat Network (RHN) to alert them to security vulnerabilities. In Linux environments, patches are normally distributed as operating system updates managed using facilities like yum (Yellowdog Updater, Modified) on RHEL and CentOS, zypper on SUSE, or apt (Advanced Packaging Tool) on Ubuntu. Users on RHEL or CentOS might run `yum check-update` to see if new updates are available and `yum update` to apply any new packages. Some administrators prefer to apply only security-related patches to minimize changes to the environment, using filtering tools such as yum-plugin-security.
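As a rough sketch of scripting the check described above, the Python below runs `yum check-update` and interprets its exit status (100 when updates are available, 0 when none are, and 1 on error). The output parser is a heuristic assumption based on yum's usual three-column `name.arch  version  repository` layout; it does not handle wrapped lines, and the function names are hypothetical.

```python
import subprocess


def parse_check_update(output: str) -> dict[str, str]:
    """Map 'name.arch' to the available version from `yum check-update` output.

    Heuristic: keep lines with exactly three fields whose first field contains a dot,
    and stop at the 'Obsoleting Packages' section. Wrapped lines are not handled.
    """
    updates = {}
    for line in output.splitlines():
        if line.startswith("Obsoleting Packages"):
            break
        fields = line.split()
        if len(fields) == 3 and "." in fields[0]:
            updates[fields[0]] = fields[1]
    return updates


def check_updates() -> dict[str, str]:
    """Run `yum -q check-update` and interpret its exit codes (requires yum on the host)."""
    proc = subprocess.run(["yum", "-q", "check-update"], capture_output=True, text=True)
    if proc.returncode == 0:
        return {}  # 0: no updates available
    if proc.returncode == 100:
        return parse_check_update(proc.stdout)  # 100: updates available
    raise RuntimeError(f"yum check-update failed: {proc.stderr.strip()}")
```

To focus on security fixes only, the same approach could be pointed at `yum --security check-update` on systems where security filtering is available (through yum-plugin-security or built-in support).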

To reduce risk when applying patches, administrators often maintain a small test cluster that mirrors their production environment so they can test updates before putting them into production. While tools like yum and apt are powerful, they are also complex. Administrators need to know how to use them to apply patches safely and roll back changes if something goes wrong.

To simplify patch management for cloud instances, administrators can use AWS Systems Manager, which includes patch management functionality. The benefit of an automated patch manager is that it provides an easy-to-use interface across multiple OS flavors, so users don't need to interact with lower-level OS facilities. While a patch manager makes it easy to apply and roll back patches, it doesn't guarantee that a new update won't affect application environments, so administrators still need to pay attention to change management.
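Patch Manager in AWS Systems Manager decides what to install through patch baselines. The sketch below only builds the keyword arguments for boto3's `ssm.create_patch_baseline()` call, so it runs without AWS access; the baseline name, the seven-day delay, the RHEL default, and the `security_baseline_request` helper are illustrative assumptions. The rule auto-approves Security-classified patches of Critical or Important severity once they have been out for a few days, which leaves time to validate them on a test cluster.

```python
def security_baseline_request(name: str,
                              operating_system: str = "REDHAT_ENTERPRISE_LINUX",
                              approve_after_days: int = 7) -> dict:
    """Build kwargs for boto3's ssm.create_patch_baseline() (illustrative values).

    Approves only Security-classified patches of Critical/Important severity,
    and only after they have been released for `approve_after_days` days.
    """
    if approve_after_days < 0:
        raise ValueError("approve_after_days must be non-negative")
    return {
        "Name": name,
        "OperatingSystem": operating_system,
        "Description": "Security-only baseline (illustrative sketch)",
        "ApprovalRules": {
            "PatchRules": [
                {
                    "PatchFilterGroup": {
                        "PatchFilters": [
                            {"Key": "CLASSIFICATION", "Values": ["Security"]},
                            {"Key": "SEVERITY", "Values": ["Critical", "Important"]},
                        ]
                    },
                    "ApproveAfterDays": approve_after_days,
                }
            ]
        },
    }
```

With credentials configured, `boto3.client("ssm").create_patch_baseline(**security_baseline_request("hpc-security"))` would create the baseline; it still has to be registered for a patch group or as the default baseline before Patch Manager uses it.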

Trusted instances

When a cloud service launches a machine instance, the instance is based on a machine image. In AWS, the image is referred to as an AMI, or Amazon Machine Image. Other cloud providers have the same concept. For example, Google Cloud Platform has the notion of public images and custom images, and Azure provides facilities to create an image of a virtual machine or VHD. In AWS Marketplace, public images prepared by third parties are available, some for a fee. For security-minded administrators, the question that always arises is, "Can I trust this image?"

Because of security-related concerns and the need for particular software, organizations often choose to deploy their own custom machine images rather than use machine images offered by the cloud provider. Instances deployed using these images are trusted because users know what software is in the image.
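One way to act on the "Can I trust this image?" question is to keep an allowlist of vetted AMI IDs and flag any running instance launched from something else. This is a minimal sketch, assuming input shaped like the response of boto3's `ec2.describe_instances()`; the allowlist contents and the `untrusted_instances` name are hypothetical.

```python
from typing import Any


def untrusted_instances(response: dict[str, Any], trusted_amis: set[str]) -> list[str]:
    """Return IDs of instances whose ImageId is not in the organization's AMI allowlist.

    `response` is assumed to be shaped like boto3's ec2.describe_instances() output.
    """
    flagged = []
    for reservation in response.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            if instance.get("ImageId") not in trusted_amis:
                flagged.append(instance["InstanceId"])
    return flagged
```

The allowlist itself would naturally be populated from the custom AMIs your organization builds and shares.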

AWS provides facilities to create custom AMIs, share them with specific accounts, and even make AMIs public. When Altair Grid Engine is deployed via AWS Marketplace, it uses a custom AMI behind the scenes containing a pre-configured OS and software provided by Altair. For customers automating the deployment of clouds or hybrid clouds on AWS or other providers, a nice feature of Navops Launch is its "bring your own AMI" functionality, which allows users to automatically provision private or shared custom images that they trust in addition to machine images offered by the cloud provider.

Trusted containers

For customers using container environments like Docker or Singularity to deploy and manage HPC applications, the same issues apply. When users pull an image from a public registry, they are trusting that the container is secure and reasonably up to date, and that it doesn't contain any malware, backdoors, or known vulnerabilities.

There are a few different ways to increase your confidence in the containers you run. One technique is to pull containers from a commercial trusted registry from a vendor you trust (e.g., Altair, The UberCloud, or a Docker Trusted Registry). Images pulled from a Docker Trusted Registry are digitally signed, so users can be confident that they haven't been altered.
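Signing (for example, Docker Content Trust) tells you who published an image. A related but distinct technique, digest pinning, tells you that the image you run is byte-for-byte the one you vetted, because registries address image manifests by a `sha256:` digest of their content. The sketch below illustrates only the digest check; the function names are hypothetical, and in practice the pinned digest would be used directly in a reference such as `docker pull repo@sha256:<digest>`.

```python
import hashlib


def manifest_digest(manifest: bytes) -> str:
    """Compute a content digest in the registry style: 'sha256:' + hex SHA-256 of the bytes."""
    return "sha256:" + hashlib.sha256(manifest).hexdigest()


def verify_pinned(manifest: bytes, pinned_digest: str) -> bool:
    """True only if the fetched manifest matches the digest recorded when the image was vetted."""
    return manifest_digest(manifest) == pinned_digest
```

Any change to the manifest, even a single byte, produces a different digest, so a pinned reference cannot silently drift to a newer or tampered image.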

Another approach to deploying trusted containers is to set up a private container registry that contains only container images vetted by your organization. Cloud users can either deploy their own registries in the cloud or look to cloud services like Amazon's Elastic Container Registry (ECR) to manage registry services for them.
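A private registry is most useful when cluster hosts are only allowed to run images from it. Below is a minimal admission-check sketch, assuming a hypothetical ECR registry for account `123456789012` in `us-east-1` (ECR registry hosts follow the `<account>.dkr.ecr.<region>.amazonaws.com` pattern) and a policy, chosen for this example, that every image must also be pinned by digest. The `image_allowed` helper is hypothetical.

```python
import re

# Hypothetical private ECR registry approved by the organization.
APPROVED_REGISTRIES = {"123456789012.dkr.ecr.us-east-1.amazonaws.com"}
# Require a digest pin like "@sha256:<64 hex chars>" at the end of the reference.
DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")


def image_allowed(ref: str, approved: set[str] = APPROVED_REGISTRIES) -> bool:
    """Admit an image only if it comes from an approved registry and is pinned by digest."""
    registry, sep, _ = ref.partition("/")
    if not sep:
        # A bare name such as "ubuntu:22.04" implicitly refers to Docker Hub.
        return False
    return registry in approved and DIGEST_RE.search(ref) is not None
```

Requiring both conditions means a vetted repository cannot be bypassed by pulling a mutable tag such as `:latest`.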

Securing the container environment

In addition to making sure that containers are trusted, HPC users running container environments should be aware of other security-related considerations. When deploying containerized workloads, the container runtime needs to be installed on each cluster host. In Docker environments, the Docker daemon runs as root by default, raising concerns about privilege escalation attacks in which containerized workloads find a way to run commands as root, compromising the security of the environment. While Docker has since added mitigations such as user namespace remapping and a rootless mode, it is still important for administrators to configure Docker appropriately to make sure the environment is secure.

Encrypting storage

For an extra measure of security, most cloud providers offer services to encrypt data at rest. Encryption services are supported for most storage types, including block storage, object storage, and various database services. When using encryption services for block storage, data inside the volume, snapshots created from the volume, and all data moving between the volume and the machine instance are encrypted. Encrypting storage provides an extra layer of security, helping ensure that your cloud storage is readable only by you.

Amazon offers EBS encryption and S3 encryption, Microsoft offers Storage Service Encryption across its various storage services, and Google encrypts data at rest by default in Google Cloud Platform. Cloud providers make encrypting storage simple, so this is another layer of defense that users can take advantage of. For those concerned about performance, Amazon states that users can expect the same IOPS performance from encrypted volumes as from unencrypted ones, with minimal impact on latency.
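To find block storage that predates an encryption policy, a short audit over the response of boto3's `ec2.describe_volumes()` is enough. This is an illustrative sketch; `unencrypted_volumes` is a hypothetical helper and pagination is ignored.

```python
from typing import Any


def unencrypted_volumes(response: dict[str, Any]) -> list[str]:
    """Return VolumeIds whose 'Encrypted' flag is false in a describe_volumes()-shaped response."""
    return [
        vol["VolumeId"]
        for vol in response.get("Volumes", [])
        if not vol.get("Encrypted", False)
    ]
```

For new volumes, `ec2.enable_ebs_encryption_by_default()` turns on EBS encryption by default in the current region. Existing unencrypted volumes are not converted in place; the usual path is to snapshot the volume, copy the snapshot with encryption enabled, and create a new volume from the copy.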

Monitoring and Auditing

So far, we've focused on hardening the cloud environment in various ways to protect access to applications and data. As important as this is, it is equally important to monitor the environment so that you know whether people are attempting to breach your defenses and whether anyone has succeeded.

For AWS users, CloudTrail is a useful resource. CloudTrail records actions and API calls made in your AWS account. It provides a detailed log of events, including sensitive activities like creating users and login profiles, adding users to groups, or modifying IAM roles or permissions. Users can use CloudTrail to automatically log AWS events to "trails" stored in Amazon Simple Storage Service (S3) buckets, or pipe events to AWS Lambda, where user-defined code can monitor events and take corrective action in case of unusual activity.
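As a sketch of the Lambda option, the handler below assumes CloudTrail events arrive through an Amazon EventBridge rule (detail type `AWS API Call via CloudTrail`), in which case the CloudTrail record sits under the event's `detail` key. The set of sensitive IAM actions mirrors the activities listed above and is an assumption to tune; the "corrective action" is reduced to a log line, and `classify` is a hypothetical helper.

```python
import json
from typing import Optional

# IAM actions treated as sensitive in this sketch (an assumption; extend as needed).
SENSITIVE_IAM_EVENTS = {
    "CreateUser", "CreateLoginProfile", "AddUserToGroup",
    "AttachUserPolicy", "PutUserPolicy",
    "AttachRolePolicy", "PutRolePolicy", "UpdateAssumeRolePolicy",
}


def classify(record: dict) -> Optional[str]:
    """Return an alert string if a CloudTrail record is a sensitive IAM change, else None."""
    if record.get("eventSource") != "iam.amazonaws.com":
        return None
    name = record.get("eventName")
    if name not in SENSITIVE_IAM_EVENTS:
        return None
    who = record.get("userIdentity", {}).get("arn", "unknown")
    return f"{name} by {who} at {record.get('eventTime', '?')}"


def handler(event: dict, context) -> dict:
    """Lambda entry point; EventBridge places the CloudTrail record under 'detail'."""
    alert = classify(event.get("detail", {}))
    if alert:
        # Stand-in for corrective action: a structured line that lands in CloudWatch Logs.
        print(json.dumps({"alert": alert}))
    return {"alert": alert}
```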

CloudTrail can also trigger Amazon Simple Notification Service (SNS) to send proactive push notifications, or pass information to Amazon Simple Queue Service (SQS) as another way of handling CloudTrail events. Users can also monitor CloudTrail events through Amazon CloudWatch, which provides a single dashboard for monitoring AWS resource usage and security-related exceptions.
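When CloudTrail notifies an SNS topic that is subscribed to an SQS queue, each queue message announces newly delivered log files rather than carrying the events themselves. The sketch below, with hypothetical function names, assumes SNS raw message delivery is disabled, pulls the bucket and object keys out of the SNS envelope, and then summarizes a downloaded log file, which CloudTrail stores as gzipped JSON with a top-level `Records` list.

```python
import gzip
import json
from collections import Counter


def log_keys_from_sqs_body(body: str) -> tuple[str, list[str]]:
    """Extract (bucket, object keys) from an SQS message carrying a CloudTrail SNS notification.

    Assumes raw message delivery is off, so the SQS body is the SNS envelope and the
    CloudTrail notification is a JSON string in its 'Message' field.
    """
    envelope = json.loads(body)
    notice = json.loads(envelope["Message"])
    return notice["s3Bucket"], list(notice["s3ObjectKey"])


def count_events(log_file_gz: bytes) -> Counter:
    """Count eventName occurrences in a gzipped CloudTrail log file."""
    records = json.loads(gzip.decompress(log_file_gz))["Records"]
    return Counter(r.get("eventName", "?") for r in records)
```

The log bytes could be fetched with `boto3.client("s3").get_object(Bucket=bucket, Key=key)["Body"].read()` before calling `count_events`.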

Other useful services

While there are security-related risks when running applications in the cloud, there are benefits as well. Cloud providers operate at a different scale than corporate data centers and are sophisticated in their understanding of security. They offer a wealth of tools, best practices, and security-related expertise that is difficult for most enterprises to replicate.

For example, the AWS Trusted Advisor service is available to all AWS users to validate S3 bucket permissions, check security groups, verify that multi-factor authentication (MFA) is enabled on login accounts, and provide security-related recommendations for IAM, EBS, and RDS. Customers with upgraded support plans can take advantage of a variety of additional automated checks and diagnostics, and receive proactive notification of security-related events. Microsoft's Azure Security Center and Google's new Cloud Security Command Center provide similarly comprehensive security services.

In addition to the various facilities we've already mentioned, users can take advantage of additional services to help monitor and secure HPC applications in AWS. Among these additional services are:

- Amazon Inspector – A security assessment service that automatically assesses applications for vulnerabilities or deviation from best practices.

- AWS Config – A service that records and evaluates the configuration of your AWS resources so that you can track configuration changes over time.

- Vulnerability and penetration testing services – A service whereby AWS or partners can be authorized to conduct various penetration tests against AWS-hosted services, or simulate events such as disaster recovery scenarios, to identify and correct vulnerabilities and provide confidence that the environment is secure.