The Excel Conundrum (Part 1): Why Most People Struggle with Data

When tasked with using data to solve a business problem, many of us struggle to get the answers we need. Far too often, the issue is not that we have shortcomings in our analytical skills, but rather that there are limitations in the tools we have at our disposal, or that these tools have special nuances to learn and we simply don’t have the time to learn them.

Do you fall into this category? Here are a few questions you can use to self-qualify:

Imagine you want to perform analysis that requires data from several different systems. Working in Excel to get data ready for deep analysis, reporting or summarization involves a series of tasks and activities that are too challenging, tedious and time-consuming for the average person.

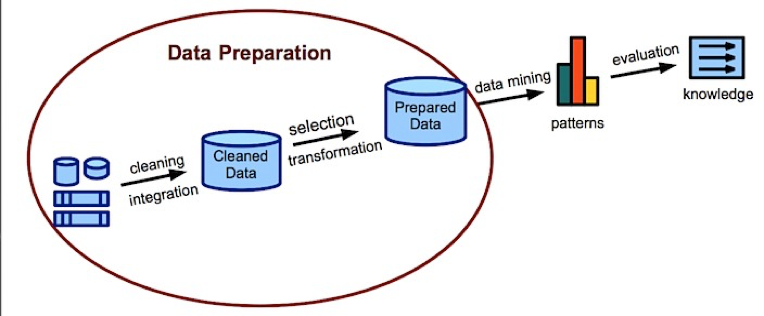

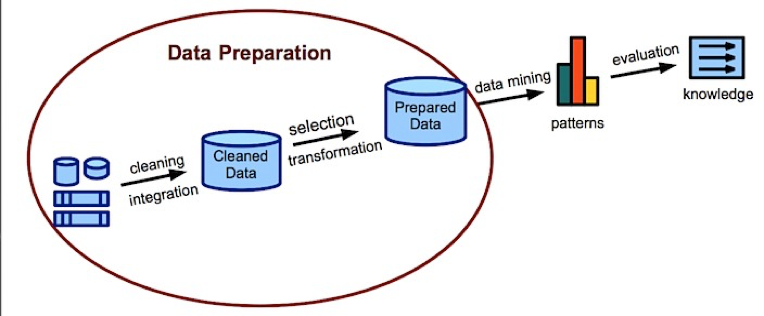

In fact, data analysts often spend up to 80% of their time correcting these issues with data preparation.

Image source

What if you no longer had to spend hours on YouTube tutorials or digging through an ‘Excel for Dummies’ book trying to learn code or formulas, find that special syntax or figure out that complex feature to achieve your data objectives, or reach out to your in-house Excel guru – again – for help yet again?

Most people don’t know there's a better way to automate data clean-up and manipulation so it will take minutes instead of hours. They’re not aware there are solutions to the day-to-day usability and data integrity issues they face with Excel.

Before we discuss the solution, in Part 2 of the blog series, let’s dig a little deeper into the realities of Excel that lead to wasted time and inaccurate data. So – what are the prime culprits?

However, it’s a manual process during which you’ll need to have multiple spreadsheets opened and are constantly switch views or scrolling all over to find the tables with the right data.

However, transferring data from PDF files can be a long process, as it has to be manually copied and formatted.

However, when you copy data from a website, the layout rarely transfers cleanly. As a result, you have a mess of hyperlinks, random ads, and missing information that’ll take hours just to clean up. Furthermore, wouldn’t it be nice if when the data updates on the web page, you automatically have that data without having to constantly go back to the site and fight through the same painstaking process all over again.

Not to mention, merging the data with existing charts can mean hours of work spent in reformatting.

Besides being time-consuming, these manual operations are error-prone and you could end up spending even more time tracking down and fixing mistakes. It can also be very difficult to get data from multiple sources to join cleanly (e.g. joining data from a big data source or database with a basic CSV file)

Amongst other things, people often forget that the data is only hidden—not completely removed—from the file and this could become a security risk. People are rushed and trying to do too many things at once, or have too many spreadsheets open, and mistakes happen.

Now that we’ve reviewed the difficulties with Excel that give the average person fits, keep an eye out for Part 2 next week where we’ll share the secret to tackling these problems.

To learn how Monarch helps you enhance Excel, check out Part 2 of this blog: The Excel Conundrum (Part 2): You Don't Need a Certification in Excel to Master Your Data.

Do you fall into this category? Here are a few questions you can use to self-qualify:

- Do you struggle to gather the data you need, rather than spending that time generating constructive insights that drive smart business decisions?

- Do you find yourself spending too much time collecting then filtering and parsing data?

- Do you spend hours trying to figure out how to tackle your data challenges, only to find that you need to code macros or build complex formulas just to get to the original task of performing analysis?

Why does this happen?

Data is inherently “dirty”, incomplete or not formatted in a way that can be used effectively. Dirty data could be caused by duplicate records, incomplete or outdated data, or the improper parsing of record fields from disparate systems. The first few times we have work with dirty data, we accept having to do a few ‘tweaks’ to get it to fit our needs. But soon those few tweaks explode into mind numbing manual efforts that derail the entire process.Imagine you want to perform analysis that requires data from several different systems. Working in Excel to get data ready for deep analysis, reporting or summarization involves a series of tasks and activities that are too challenging, tedious and time-consuming for the average person.

In fact, data analysts often spend up to 80% of their time correcting these issues with data preparation.

Image source

What if you no longer had to spend hours on YouTube tutorials or digging through an ‘Excel for Dummies’ book trying to learn code or formulas, find that special syntax or figure out that complex feature to achieve your data objectives, or reach out to your in-house Excel guru – again – for help yet again?

Most people don’t know there's a better way to automate data clean-up and manipulation so it will take minutes instead of hours. They’re not aware there are solutions to the day-to-day usability and data integrity issues they face with Excel.

Before we discuss the solution, in Part 2 of the blog series, let’s dig a little deeper into the realities of Excel that lead to wasted time and inaccurate data. So – what are the prime culprits?

Verifying Data

One of the most basic tasks we do with Excel is reviewing a data table to identify errors so we can avoid wasting time and effort in visualizing wrong or incomplete data.However, it’s a manual process during which you’ll need to have multiple spreadsheets opened and are constantly switch views or scrolling all over to find the tables with the right data.

Extracting Data From Locked Data Sources (for example, PDFs)

How many times have you come across the perfect chart or set of data – but the only copy you have is in a PDF? Most times, you have to copy charts and data from those documents and reports.However, transferring data from PDF files can be a long process, as it has to be manually copied and formatted.

Using Data From Live & Dynamic Websites

There’s a wealth of valuable data available on the web that can be used to enrich your data analysis. Such data is usually updated regularly, which means it’s precise and accurate. It’s faster and more reliable to go to a third party maintained website (such as a Financial institution for interest rates (..or whatever) to get accurate, timely, and precise information.However, when you copy data from a website, the layout rarely transfers cleanly. As a result, you have a mess of hyperlinks, random ads, and missing information that’ll take hours just to clean up. Furthermore, wouldn’t it be nice if when the data updates on the web page, you automatically have that data without having to constantly go back to the site and fight through the same painstaking process all over again.

Not to mention, merging the data with existing charts can mean hours of work spent in reformatting.

Combining Data Sets

Joining multiple data sets in Excel can involve a number of steps and complex maneuvers using VLOOKUPs, custom coding. and manual copy-and-pasting.Besides being time-consuming, these manual operations are error-prone and you could end up spending even more time tracking down and fixing mistakes. It can also be very difficult to get data from multiple sources to join cleanly (e.g. joining data from a big data source or database with a basic CSV file)

Fixing Missing Data

Don’t even get me started on deal with “nulls”. After importing data, you often must search for “nulls,” replace them with a value and repeat the process for each row and column in each data set, which is a highly manual and time-consuming process.Masking Sensitive Data

In order to comply with corporate or regulatory standards, you will need to mask your data using complex macros to protect sensitive data in your reports. You either need to spend time coding the macros or get someone else with the technical knowledge, and the time, to do it for you.Amongst other things, people often forget that the data is only hidden—not completely removed—from the file and this could become a security risk. People are rushed and trying to do too many things at once, or have too many spreadsheets open, and mistakes happen.

Consolidating Data Tables

When you need to combine rows from different spreadsheets, you have to manually copy-and-paste the information. This may be fine for a few dozen rows, but the process is tedious and time-consuming. It’s often impossible when the number of rows balloons into the hundreds or thousands.Version Control and History of Work

When you share your files and have multiple parties working on the data, Excel doesn’t allow you to track the activities or implement version control. This can lead to duplicative work, inaccurate data, and frustration.Reconciling Reports

Every time new data is changed in an Excel spreadsheet, you have to manually update reports or dashboards and BI tools that are pulling from this data. If you have a large data set, changes can be hard to identify.Repeating Data Prep For New Data

When new data comes in, you have to repeat all the data prep steps manually. If you want to have all your reports updated dynamically as soon as data is changed or added, you need to spend a lot of time creating complex macros to do the job.Now that we’ve reviewed the difficulties with Excel that give the average person fits, keep an eye out for Part 2 next week where we’ll share the secret to tackling these problems.

To learn how Monarch helps you enhance Excel, check out Part 2 of this blog: The Excel Conundrum (Part 2): You Don't Need a Certification in Excel to Master Your Data.