Integrating HPC and Machine Learning Workloads with Altair® PBS Professional® and Kubernetes

Workload orchestration isn’t one-size-fits-all, and the range of requirements keeps broadening. That’s why we’ve integrated our industry-leading Altair® PBS Professional® workload manager and job scheduler with the popular Kubernetes (K8s) container orchestrator, giving high-performance computing (HPC) users the best of both worlds: training machine learning (ML) models and submitting simulation jobs.

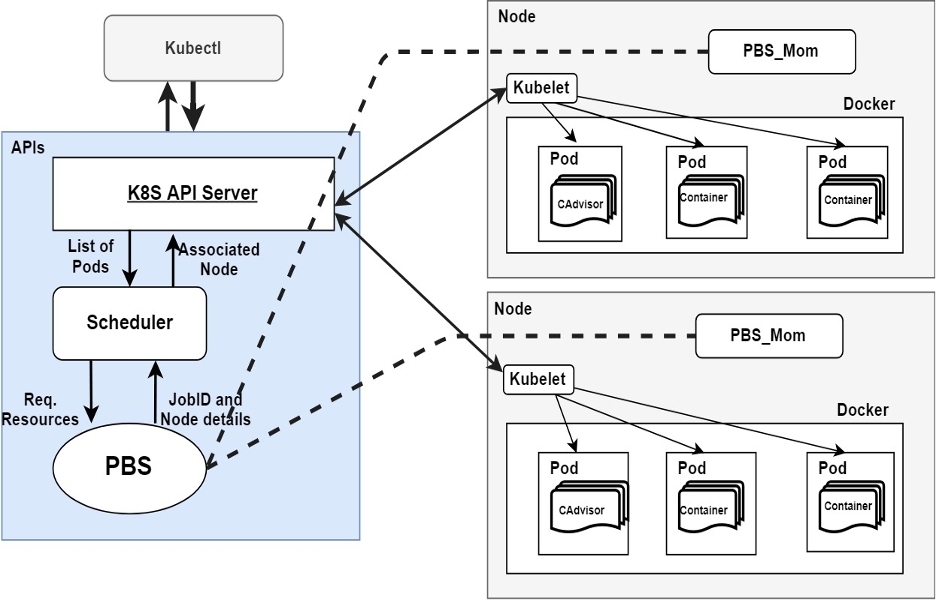

The integration works by having PBS Professional schedule and provision Kubernetes container pods. Sites can therefore run both HPC workloads and container workloads on the same HPC cluster without partitioning it into two separate portions. They can also take advantage of the sophisticated scheduling algorithms in PBS Professional and administer the cluster centrally, using a single scheduler with a global set of policies.

Kubernetes ships with a default scheduler, but because that scheduler does not suit our needs, we use a custom scheduler. The custom scheduler talks to the Kubernetes API server, retrieves unscheduled pods, and then asks PBS Professional to schedule them. It has not been verified to run alongside the default Kubernetes scheduler. In theory, users can tell Kubernetes which scheduler to use for a pod through the schedulerName field of the pod definition. The integration itself is implemented with PBS Professional hooks.
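The pod-selection step of that flow can be sketched in Python. This is a minimal illustration, not the connector’s code (the connector’s scheduler is written in Go): it treats pods as dicts shaped like Kubernetes API JSON and keeps only those that name the custom scheduler in spec.schedulerName and have no spec.nodeName yet. The scheduler name pbs-scheduler is hypothetical.

```python
# Minimal sketch of the custom scheduler's pod-selection step.
# Assumption (hypothetical): the custom scheduler is named "pbs-scheduler",
# and pods are dicts shaped like the JSON returned by the Kubernetes API.

CUSTOM_SCHEDULER = "pbs-scheduler"  # hypothetical name, not from the connector


def pending_pods_for(pods, scheduler_name=CUSTOM_SCHEDULER):
    """Return pods that name this scheduler and are not yet bound to a node."""
    selected = []
    for pod in pods:
        spec = pod.get("spec", {})
        # Pods that omit schedulerName go to Kubernetes' "default-scheduler".
        if spec.get("schedulerName", "default-scheduler") != scheduler_name:
            continue
        # A missing or empty nodeName means the pod has not been scheduled yet.
        if spec.get("nodeName"):
            continue
        selected.append(pod)
    return selected


if __name__ == "__main__":
    pods = [
        {"metadata": {"name": "redis"}, "spec": {"schedulerName": "pbs-scheduler"}},
        {"metadata": {"name": "web"}, "spec": {}},
        {"metadata": {"name": "db"},
         "spec": {"schedulerName": "pbs-scheduler", "nodeName": "node01"}},
    ]
    print([p["metadata"]["name"] for p in pending_pods_for(pods)])  # ['redis']
```

Filtering on both fields keeps the sketch from touching pods that belong to another scheduler or that are already running on a node.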

NOTE: The current integration assumes a single, predefined namespace; requesting a custom namespace will cause failures or unpredictable results.

A hook is a block of Python code that PBS Professional executes at certain events; for example, when a job is queued. Each hook can accept (allow) or reject (prevent) the action that triggers it. A hook can make calls to functions external to PBS Professional.

About PBS Professional

Our enterprise-grade PBS Professional scheduler is well known in the HPC world, with scalability and scheduling policies that have evolved over nearly two decades. PBS Professional is designed to improve productivity, optimize utilization and efficiency, and simplify administration for HPC clusters, clouds, and supercomputers. Its features include automated job scheduling, management, monitoring, and reporting, plus complex scheduling policies such as fairshare, first-come, first-served (FCFS), a formula for sorting jobs and nodes, backfilling, and strict ordering.

About Kubernetes

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications. It’s designed to remove the burden of orchestrating physical and virtual compute, network, and storage infrastructure, and since its introduction in 2015 it has gained traction for deploying and training ML models.

Deploying Kubernetes and PBS Professional

Kubernetes provides APIs for writing custom schedulers, but you can avoid that time and effort by using the PBS Professional connector and custom scheduler we have developed and made available on GitHub (https://github.com/PBSPro/kubernetes-pbspro-connector). The custom scheduler runs alongside the default PBS scheduler. PBS Professional’s scheduling policies have matured through years of industry use, and through this integration Kubernetes can take advantage of them, with the policies configured to match each customer’s requirements.

Users submit HPC jobs with qsub and submit Kubernetes pods with the kubectl CLI, both to the same cluster.

Configuration and Setup

Before you deploy the integration, PBS Professional must already be installed on your cluster, Kubernetes must be installed on the PBS server host, and the kubectl command must be installed in the /bin directory on the PBS server host.
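A quick preflight check for these prerequisites might look like the following minimal Python sketch. It only confirms that qsub and kubectl can be found on PATH with shutil.which and that an executable kubectl exists in /bin; it does not check versions or whether the PBS and Kubernetes services are running, and the command list is an assumption you can extend.

```python
# Minimal preflight sketch for the prerequisites listed above.
# Assumption: checking qsub and kubectl on PATH (and kubectl in /bin) is
# enough for a first sanity check; versions and server health are not tested.
import os
import shutil

REQUIRED_COMMANDS = ("qsub", "kubectl")


def missing_commands(commands=REQUIRED_COMMANDS):
    """Return the commands that cannot be found on the current PATH."""
    return [cmd for cmd in commands if shutil.which(cmd) is None]


def kubectl_in_bin(path="/bin/kubectl"):
    """True if an executable kubectl exists at the expected location."""
    return os.path.isfile(path) and os.access(path, os.X_OK)


if __name__ == "__main__":
    missing = missing_commands()
    if missing:
        print("Missing prerequisites:", ", ".join(missing))
    if not kubectl_in_bin():
        print("kubectl was not found in /bin")
    if not missing and kubectl_in_bin():
        print("Prerequisite commands found")
```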

The deployment of the integration involves cloning the kubernetes-pbspro-connector repository from GitHub, installing the PBS Professional hook, updating the apiHost attribute, and compiling the custom scheduler.

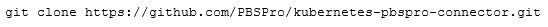

- Clone the Kubernetes PBS Professional Connector repository to the PBS server host.

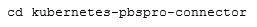

- Change to the kubernetes-pbspro-connector directory.

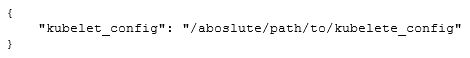

- Update pbs_kubernetes.CF with the absolute path to the kubelet config directory. The value of --config is the absolute path to the directory that the kubelet watches for pod manifests to run. Create this directory before starting the scheduler.

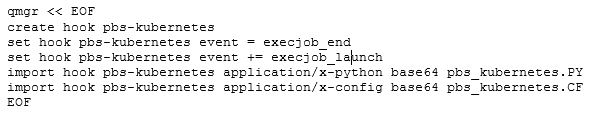

- Install the PBS Professional hook and config file.

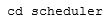

- Change to the scheduler directory.

- Update kubernetes.go by setting apiHost to the location of the apiserver proxy. This example uses proxy port 8001.

- Build the custom scheduler.
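The two values edited in these steps can be sanity-checked before anything is started. This Python sketch assumes hypothetical inputs: a manifest-directory path string, an apiHost of 127.0.0.1:8001 (the proxy port used in this example), and the scheduler name pbs-scheduler. It does not parse pbs_kubernetes.CF or kubernetes.go, whose exact formats are not shown here. The URL targets the standard Kubernetes pods endpoint with field selectors for unbound pods (spec.nodeName=) and for a given scheduler name.

```python
# Sketch: sanity-check the two values edited during setup.
# Assumptions (hypothetical): the manifest directory is given as a path string,
# the apiserver proxy listens on 127.0.0.1:8001, and the custom scheduler is
# named "pbs-scheduler". The real file formats may differ.
import json
import os
import urllib.request
from urllib.parse import urlencode


def ensure_manifest_dir(path):
    """Require an absolute path and create the directory if it is missing."""
    if not os.path.isabs(path):
        raise ValueError(f"manifest directory must be an absolute path: {path!r}")
    os.makedirs(path, exist_ok=True)
    return path


def pending_pods_url(api_host="127.0.0.1:8001", scheduler_name="pbs-scheduler"):
    """URL listing pods in all namespaces that are unbound and use the scheduler."""
    # "spec.nodeName=" (empty value) matches pods not yet bound to a node.
    selector = f"spec.nodeName=,spec.schedulerName={scheduler_name}"
    return f"http://{api_host}/api/v1/pods?" + urlencode({"fieldSelector": selector})


def fetch_pending_pods(url, timeout=10):
    """Return the "items" list of the PodList returned through the proxy."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp).get("items", [])
```

Once the proxy is running, fetch_pending_pods(pending_pods_url()) should return an empty list on an idle cluster; a connection error at that point usually means the proxy is not listening on the port you configured.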

Start apiserver Proxy and Custom Scheduler

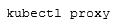

As root on the PBS server host, start the apiserver proxy. We recommend starting it in a separate terminal window, because it logs information to the screen.

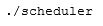
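Starting the proxy and confirming that it is listening can be scripted. This Python sketch assumes the apiserver proxy is kubectl proxy started on port 8001, matching the apiHost example; the polling interval and timeout are arbitrary choices, not values from the connector.

```python
# Sketch: start the apiserver proxy and wait until it accepts connections.
# Assumption: the proxy is `kubectl proxy` on port 8001 (matching apiHost).
import socket
import subprocess
import time


def proxy_command(port=8001):
    """Command line for kubectl's apiserver proxy on the given port."""
    return ["kubectl", "proxy", f"--port={port}"]


def wait_for_port(host, port, timeout=10.0):
    """Poll until a TCP connection to host:port succeeds or timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(0.2)  # not listening yet; retry shortly
    return False


if __name__ == "__main__":
    proc = subprocess.Popen(proxy_command())  # proxy logs to this terminal
    if not wait_for_port("127.0.0.1", 8001):
        proc.terminate()
        raise SystemExit("kubectl proxy is not listening on port 8001")
    print("apiserver proxy is up; start the custom scheduler next")
    proc.wait()  # keep the proxy running in the foreground
```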

As root, start the custom scheduler (kubernetes-pbspro-connector/scheduler/scheduler). We recommend starting it in a separate terminal window, because it also logs information to the screen.

The scheduler periodically prints messages marking the start and end of each scheduling iteration, and it logs each job it schedules.
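The loop structure behind those messages can be outlined as follows. In this hypothetical Python sketch, fetch_pending and place are callables you would supply (for example, a query through the apiserver proxy and a hand-off to PBS Professional); the logger name, log wording, and 10-second interval are assumptions, not the connector’s actual output or timing.

```python
# Hypothetical outline of one scheduling iteration with start/end logging.
# fetch_pending and place are caller-supplied; log text is illustrative only.
import logging
import time

log = logging.getLogger("pbs-k8s-scheduler")  # hypothetical logger name


def run_iteration(fetch_pending, place):
    """Hand each unscheduled pod to `place`; return names of pods placed."""
    log.info("scheduling iteration start")
    placed = []
    for pod in fetch_pending():
        name = pod["metadata"]["name"]
        if place(pod):  # e.g. ask PBS Professional to run the pod
            log.info("scheduled %s", name)
            placed.append(name)
    log.info("scheduling iteration end")
    return placed


def run_forever(fetch_pending, place, interval=10.0):
    """Repeat iterations every `interval` seconds (interval is an assumption)."""
    while True:
        run_iteration(fetch_pending, place)
        time.sleep(interval)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    demo = [{"metadata": {"name": "redis"}}]
    run_iteration(lambda: demo, lambda pod: True)
```

Injecting the two callables keeps the loop testable without a live cluster or PBS server.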

User Experience and Validation

Kubernetes users create containers and pods the same way they always have. Below is a simple example that assigns a CPU and memory request and a CPU and memory limit to a container. A container is guaranteed to have as much memory as it requests, but it is not allowed to use more memory than its limit.

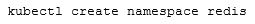

Create a Namespace

The user creates the namespace.

Specify CPU and Memory Requests

To specify a CPU and memory request for a container, include the resources:requests field in the container's resource manifest. To specify a CPU and memory limit, include resources:limits.

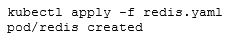
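Because kubectl accepts JSON as well as YAML manifests, an equivalent manifest can also be generated programmatically. This Python sketch builds a single-container pod with requests and limits; the pod name redis, the image, and the resource values are illustrative assumptions, and spec.schedulerName is set only if you pass one.

```python
# Sketch: build a pod manifest with CPU/memory requests and limits as JSON.
# Assumptions: name, image, and resource values are illustrative only.
import json


def pod_manifest(name, image, cpu_request, mem_request, cpu_limit, mem_limit,
                 scheduler_name=None):
    """Return a single-container Pod manifest as a dict."""
    spec = {
        "containers": [
            {
                "name": name,
                "image": image,
                "resources": {
                    # Requests: used by the scheduler to place the pod.
                    "requests": {"cpu": cpu_request, "memory": mem_request},
                    # Limits: the most the container is allowed to use.
                    "limits": {"cpu": cpu_limit, "memory": mem_limit},
                },
            }
        ]
    }
    if scheduler_name:
        spec["schedulerName"] = scheduler_name  # optional scheduler selection
    return {"apiVersion": "v1", "kind": "Pod",
            "metadata": {"name": name}, "spec": spec}


if __name__ == "__main__":
    manifest = pod_manifest("redis", "redis", "500m", "100Mi", "1", "200Mi")
    print(json.dumps(manifest, indent=2))
```

Saving the output to a file such as redis.json (a hypothetical name) lets you apply it with kubectl apply -f redis.json --namespace followed by your namespace.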

Create and Apply the Pod

The user applies the resource manifest to the namespace, and the container pod is deployed.

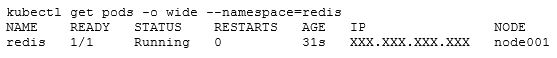

Verify the Container Pod Is Running

The container pod is deployed to the node and registered with PBS Professional using the same resource requests. The redis pod should be in the Running state:

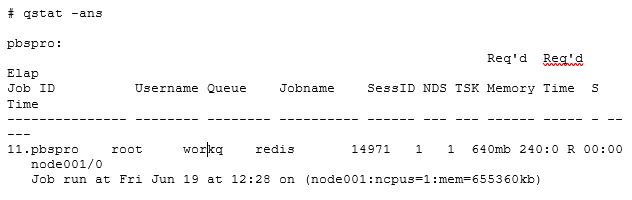

The PBS Professional job status shows a running job whose name matches the name of the pod.
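To make the correspondence between a pod and its PBS Professional job concrete, the following Python sketch turns a pod’s summed container requests into a PBS-style select statement and reuses the pod name as the job name. The mapping is hypothetical and may not match the connector’s: it rounds fractional CPU requests up to whole ncpus, handles only common Kubernetes quantity suffixes, and assumes PBS memory units are binary (1mb = 1024 kb).

```python
# Hypothetical mapping from a pod's requests to a PBS-style resource request.
# Assumptions: fractional CPUs round up to whole ncpus; PBS memory units are
# binary (1mb = 1024 kb); only common Kubernetes quantity suffixes are handled.
import math

_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,  # binary
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,     # decimal
}


def parse_cpu(quantity):
    """Convert a CPU quantity such as "500m" or "2" to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity):
    """Convert a memory quantity such as "64Mi", "1G", or "1024" to bytes."""
    for suffix, factor in _SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[:-len(suffix)]) * factor)
    return int(quantity)


def pbs_request(pod):
    """Return (job_name, select) with requests summed over all containers."""
    cpu = mem = 0
    for container in pod["spec"]["containers"]:
        requests = container.get("resources", {}).get("requests", {})
        cpu += parse_cpu(requests.get("cpu", "0"))
        mem += parse_memory(requests.get("memory", "0"))
    ncpus = max(1, math.ceil(cpu))
    mem_mb = max(1, math.ceil(mem / 2**20))
    # The job name reuses the pod name, as the PBS job status shows.
    return pod["metadata"]["name"], f"select=1:ncpus={ncpus}:mem={mem_mb}mb"
```

For a redis pod requesting 500m CPU and 100Mi memory, pbs_request returns ("redis", "select=1:ncpus=1:mem=100mb"), conceptually similar to qsub -N redis -l select=1:ncpus=1:mem=100mb.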

Terminate the Container Pod

The user terminates the container pod the same way they would terminate any other pod.


Benefits for HPC and ML Workloads

Sites running both HPC and machine learning workloads will benefit from running Kubernetes and PBS Professional in tandem. Kubernetes can leverage the scalability and scheduling policies of PBS Professional, while PBS Professional can leverage container orchestration in Kubernetes and strengthen Kubernetes scheduling. Together they dispatch each pod to the best host, consolidating environments so that clusters no longer operate as independent silos and resources can be shared, which is a big efficiency boost.