Credit Scoring Series Part Nine: Scorecard Implementation, Deployment, Production, and Monitoring

The real benefit of a scorecard or a credit strategy is only evident upon implementation. The final stage of the CRISP-DM framework, implementation, represents the transition from the data science domain to the information technology (IT) domain. Consequently, the roles and responsibilities also shift from data scientists and business analysts to system administrators, database administrators, and testers.

Prior to scorecard implementation, teams must make several technology decisions. These decisions cover data availability, the choice of hardware and software, who is responsible for scorecard implementation, who is responsible for scorecard maintenance, and whether production is performed in-house or outsourced.

Scorecard implementation is a sequential process that’s initiated once the business has signed off on the scorecard model. The process starts with generating the scorecard deployment code, followed by pre-production, production, and post-production.

Figure 1. Scorecard Implementation Stages

Deployment Code

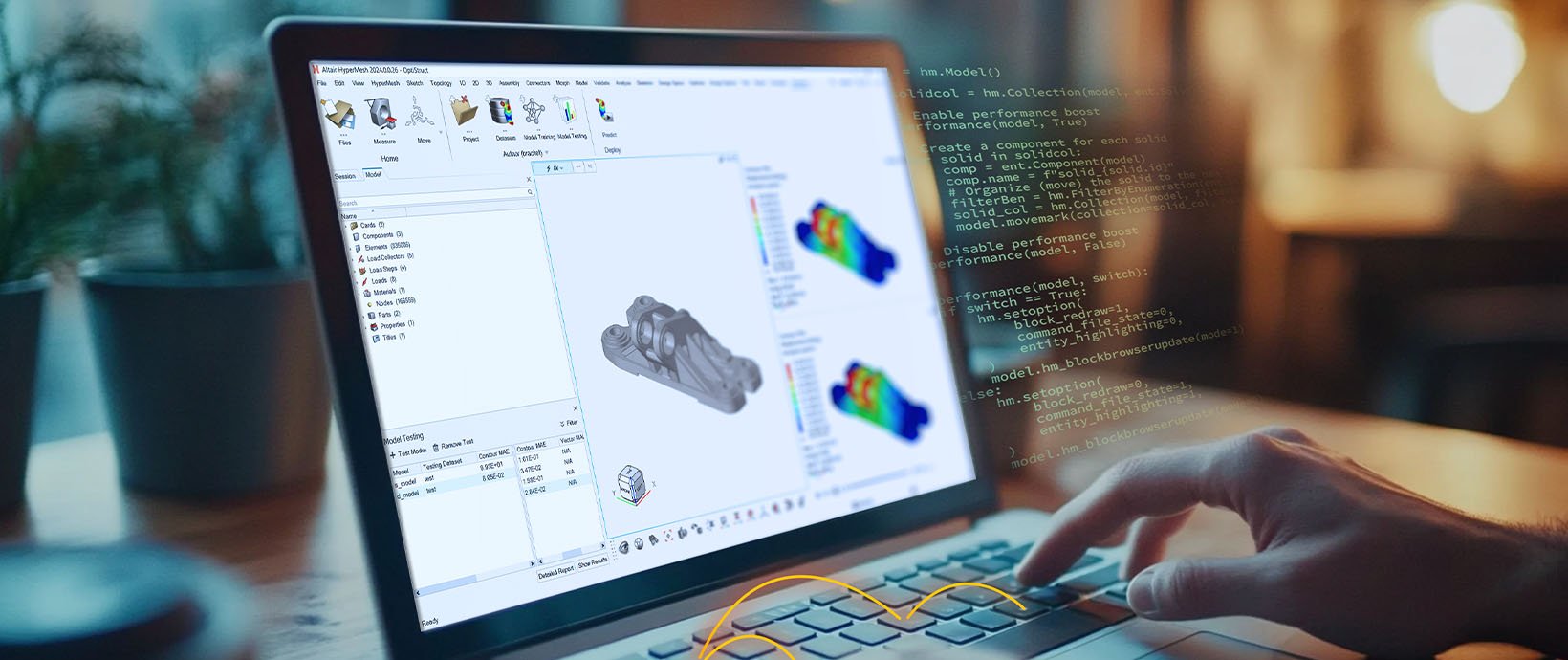

Deployment code is created by translating a conceptual model, such as a model equation or a tabular form of a scorecard, into an equivalent software artefact ready to run on a server. The implementation platform on which the model will run determines the deployment language, which could be, for example, the SAS language (Figure 2), SQL, PMML, or C++. Writing model deployment code by hand can be error-prone and often becomes a bottleneck, because several code-refinement cycles are typically needed before the code is ready. Some analytical vendors offer automatic generation of deployment code in their software, a desirable feature that reduces coding errors and shortens both the deployment time and the code-testing cycle.

Figure 2. Automatic generation of SAS language deployment code with World Programming software
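To make the idea of translating a tabular scorecard into code concrete, here is a minimal Python sketch. The characteristics, bin boundaries, points, and base score are all hypothetical and chosen only for illustration; real deployment code would be generated from the signed-off scorecard in whatever language the target platform requires.

```python
from typing import Dict, List, Optional, Tuple

# One bin: (lower bound inclusive, upper bound exclusive, points).
# None means the bin is open-ended on that side.
Bin = Tuple[Optional[float], Optional[float], int]

# Hypothetical scorecard table (illustrative values only).
SCORECARD: Dict[str, List[Bin]] = {
    "age": [(None, 25, 10), (25, 40, 25), (40, None, 40)],
    "months_at_address": [(None, 12, 5), (12, 60, 15), (60, None, 30)],
}
BASE_POINTS = 300  # assumed offset, not taken from the article


def points_for(characteristic: str, value: float) -> int:
    """Return the points of the bin that contains `value`."""
    for lower, upper, points in SCORECARD[characteristic]:
        if (lower is None or value >= lower) and (upper is None or value < upper):
            return points
    raise ValueError(f"no bin for {characteristic}={value}")


def score(applicant: Dict[str, float]) -> int:
    """Add the base points to the points earned on every characteristic."""
    return BASE_POINTS + sum(points_for(name, applicant[name]) for name in SCORECARD)
```

Keeping the table as data and the lookup as a small generic function means a revised scorecard only changes `SCORECARD`, which is one way to limit the hand-coding errors described above.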

Scorecard implementation, whether on a pre-production server for testing or a production server for real-time scoring, requires an API wrapper placed around the model deployment code to handle remote scoring requests. Model inputs, drawn from internal and external data sources, can be extracted either outside or inside the scoring engine. In the first approach, variable extraction runs outside the scoring engine and the variables are passed as parameters of an API request. In the second, depicted in Figure 3, pre-processing code runs inside the scoring engine, so variable extraction and model scoring happen on the same engine.

Figure 3. Real Time Scoring using API Call
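The first approach, where variables are extracted outside the scoring engine and passed in the request, can be sketched as a thin wrapper function. This is only an illustration: the JSON layout, the `handle_scoring_request` name, and the `toy_model` weights are assumptions, and a real service would sit behind a web framework with authentication and logging.

```python
import json
from typing import Callable, Dict


def toy_model(variables: Dict[str, float]) -> float:
    """Stand-in for generated deployment code (hypothetical weights)."""
    return 300 + 2.0 * variables["age"] + 0.5 * variables["months_at_address"]


def handle_scoring_request(
    body: str,
    model: Callable[[Dict[str, float]], float] = toy_model,
) -> str:
    """Parse a JSON scoring request, call the model, and return a JSON response."""
    try:
        request = json.loads(body)
        variables = request["variables"]  # already extracted by the caller
        result = {"status": "ok", "score": model(variables)}
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        # Malformed JSON, a missing field, or a wrongly shaped payload.
        result = {"status": "error", "message": f"bad request: {exc!r}"}
    return json.dumps(result)
```

A caller would send something like `{"variables": {"age": 30, "months_at_address": 20}}` and read the `score` field from the response.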

Pre-Production and Production

Pre-production is an environment used to run a range of tests before committing the model to the (live) production environment. These tests would typically be model evaluation and validity tests, system tests that measure the request and response time under anticipated peak load, or installation and system configuration tests.

Thoroughly tested and approved models are uploaded to their final destination, the production environment. Models running on a production server can be in an active or a passive state. Active models are champion models whose scores are used in real time in the decision-making process, for example to approve or reject a credit application. Passive models are typically challenger models that are not yet used in decision making, but whose scores are recorded and analyzed over a period of time to justify their business value before they become active models.
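The active and passive states can be illustrated with a short sketch in which only the champion's score drives the decision while every challenger's score is merely recorded. The function name, the cut-off argument, and the in-memory `score_log` list are hypothetical; a production system would write to a persistent store.

```python
from typing import Callable, Dict, List

Applicant = Dict[str, float]
Model = Callable[[Applicant], float]


def decide(
    applicant: Applicant,
    champion: Model,
    challengers: Dict[str, Model],
    cutoff: float,
    score_log: List[dict],
) -> str:
    """Approve or reject on the champion score; log challenger scores only."""
    champion_score = champion(applicant)
    score_log.append({"model": "champion", "score": champion_score})

    # Passive models: scored and recorded, never used in the decision.
    for name, model in challengers.items():
        score_log.append({"model": name, "score": model(applicant)})

    return "approve" if champion_score >= cutoff else "reject"
```

The logged challenger scores can later be compared with observed outcomes to decide whether a challenger should be promoted.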

Monitoring

Every model degrades over time as the result of natural model evolution influenced by many factors, including new product launches, marketing incentives, or economic drift, which is why model monitoring is imperative to prevent negative business effects.

Model monitoring is post-implementation testing used to determine if models continue to be in line with expected performance. IT infrastructure needs to be set up in advance to enable monitoring by facilitating model report generation, a repository for storing reports, and a monitoring dashboard.

Figure 4. Model Monitoring Process

Model reports can be used to, for example, identify whether the characteristics of new applicants change over time, establish whether the score cut-off needs to change to adjust the acceptance or default rate, or determine whether the scorecard ranks customers across risk bands in the same way it ranked the modeling population.

Scorecard degradation is typically captured using pre-defined threshold values. Depending on the magnitude of change, a relevant course of action is taken. For example, teams can ignore minor changes in scorecard performance, but moderate changes might require more frequent monitoring or scorecard recalibration. Any major change might require teams to rebuild the model or transition to the best alternate model.
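As a rough sketch, the mapping from a monitored index value to a course of action could look like the following. The default thresholds of 0.10 and 0.25 are a commonly quoted rule of thumb for stability indices and are an assumption here, not values given in this article; each lender would set its own.

```python
def monitoring_action(index_value: float, minor: float = 0.10, major: float = 0.25) -> str:
    """Map a degradation index to an action using assumed threshold values."""
    if index_value < 0:
        raise ValueError("index value must be non-negative")
    if index_value < minor:
        return "no action"
    if index_value < major:
        return "monitor more frequently or recalibrate"
    return "rebuild or switch to the best alternate model"
```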

Credit-risk departments have access to an extensive array of reports, including a variety of drift reports, performance reports, and portfolio analyses (Table 1). The two most typical reports are population stability and performance tracking. Population stability measures the change in the distribution of credit scores in the population over time. The stability report generates an index that indicates the magnitude of change in customer behavior as a result of population changes. Any significant shift would trigger an alert requesting a redesign of the model. A performance tracking report is a back-end report that requires sufficient time for customer accounts to mature so that customer performance can be assessed. Its purpose is twofold: first, it tests the scorecard’s power by checking whether it can still rank customers by risk; then, it tests its accuracy by comparing the default rates expected at the time of modeling with current default rates.
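One widely used form of such a stability index is the population stability index (PSI), which sums (actual share − expected share) × ln(actual share / expected share) over score bands. The sketch below assumes the inputs are account counts per score band, in the same band order for both populations; the small `floor` that guards against empty bands is an implementation choice of this example.

```python
import math
from typing import Sequence


def population_stability_index(
    expected_counts: Sequence[float],
    actual_counts: Sequence[float],
    floor: float = 1e-6,
) -> float:
    """PSI between the modeling (expected) and recent (actual) score distributions."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both populations must use the same score bands")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    if expected_total <= 0 or actual_total <= 0:
        raise ValueError("each population needs at least one account")

    psi = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Convert counts to shares; floor avoids log(0) and division by zero.
        expected_share = max(expected / expected_total, floor)
        actual_share = max(actual / actual_total, floor)
        psi += (actual_share - expected_share) * math.log(actual_share / expected_share)
    return psi
```

Identical distributions give a PSI of zero, and the value grows as the recent population drifts away from the modeling population.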

| Report type | Report name |
|---|---|
| Drift reports | Population stability |
| | Characteristic analysis |
| Performance reports | Performance tracking |
| | Vintage analysis |
| Portfolio analysis | Delinquency distribution |
| | Transition matrix |

Table 1. Scorecard Monitoring Reports
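The two checks made by a performance tracking report, ranking power and accuracy, can be sketched per score band as follows. The input layout (band label, expected default rate, matured accounts, observed defaults, ordered from the lowest to the highest score band) and the function names are assumptions for this illustration.

```python
from typing import List, NamedTuple, Sequence, Tuple


class BandResult(NamedTuple):
    band: str
    expected_rate: float
    observed_rate: float
    difference: float  # observed minus expected


def performance_tracking(
    bands: Sequence[Tuple[str, float, int, int]],
) -> List[BandResult]:
    """Compare expected and observed default rates for each score band."""
    results = []
    for band, expected_rate, accounts, defaults in bands:
        if accounts <= 0:
            raise ValueError(f"band {band!r} has no matured accounts")
        observed_rate = defaults / accounts
        results.append(
            BandResult(band, expected_rate, observed_rate, observed_rate - expected_rate)
        )
    return results


def ranks_by_risk(results: Sequence[BandResult]) -> bool:
    """True if the observed default rate never rises from lower to higher score bands."""
    rates = [result.observed_rate for result in results]
    return all(earlier >= later for earlier, later in zip(rates, rates[1:]))
```

Large values in `difference` point to an accuracy problem, while a `False` from `ranks_by_risk` suggests the scorecard no longer orders customers by risk.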

The challenge with model monitoring is the long delay between a change request and its implementation. The complexity of the tasks needed to support monitoring for every model running in the production environment (Figure 1), including code to generate reports, access to the relevant data sources, model management, report schedulers, model degradation alerts, and visualization of the reports, makes for a demanding process. This has been the main motivation for lenders to either outsource model monitoring or invest in an automated process that facilitates it with minimal human effort.

Conclusion

Credit scoring is a dynamic, flexible, and powerful tool for lenders, but there are plenty of ins and outs that are worth covering in detail. To learn more about credit scoring and credit risk mitigation techniques, read the next installment of our credit scoring series.

Read prior Credit Scoring Series installments:

- Part One: Introduction to Credit Scoring

- Part Two: Scorecard Modeling Methodology

- Part Three: Data Preparation and Exploratory Data Analysis

- Part Four: Variable Selection

- Part Five: Credit Scorecard Development

- Part Six: Segmentation and Reject Inference

- Part Seven: Additional Credit Risk Modeling Considerations

- Part Eight: Credit Risk Strategies