Optimizing GPU Workloads for AI and Machine Learning

In today’s data centers and in the cloud, GPUs have become a staple of accelerated high-performance computing (HPC), especially for artificial intelligence (AI) and machine learning (ML) workloads. The drive for faster, more efficient compute and optimized input/output (I/O) — and ultimately better time-to-results and ROI — demands GPU-capable tools, including workload management and job scheduling software.

AI Leadership With NVIDIA DGX Systems

In AI data centers, managing distributed GPU-powered ML frameworks is a central challenge. Data scientists run diverse workloads ranging from data preparation and model training to model validation and inference. These workloads need to run quickly and use resources efficiently, and their placement must account for factors such as CPU and GPU architecture, memory, cache, bus topologies, and interconnect and network switch topologies.

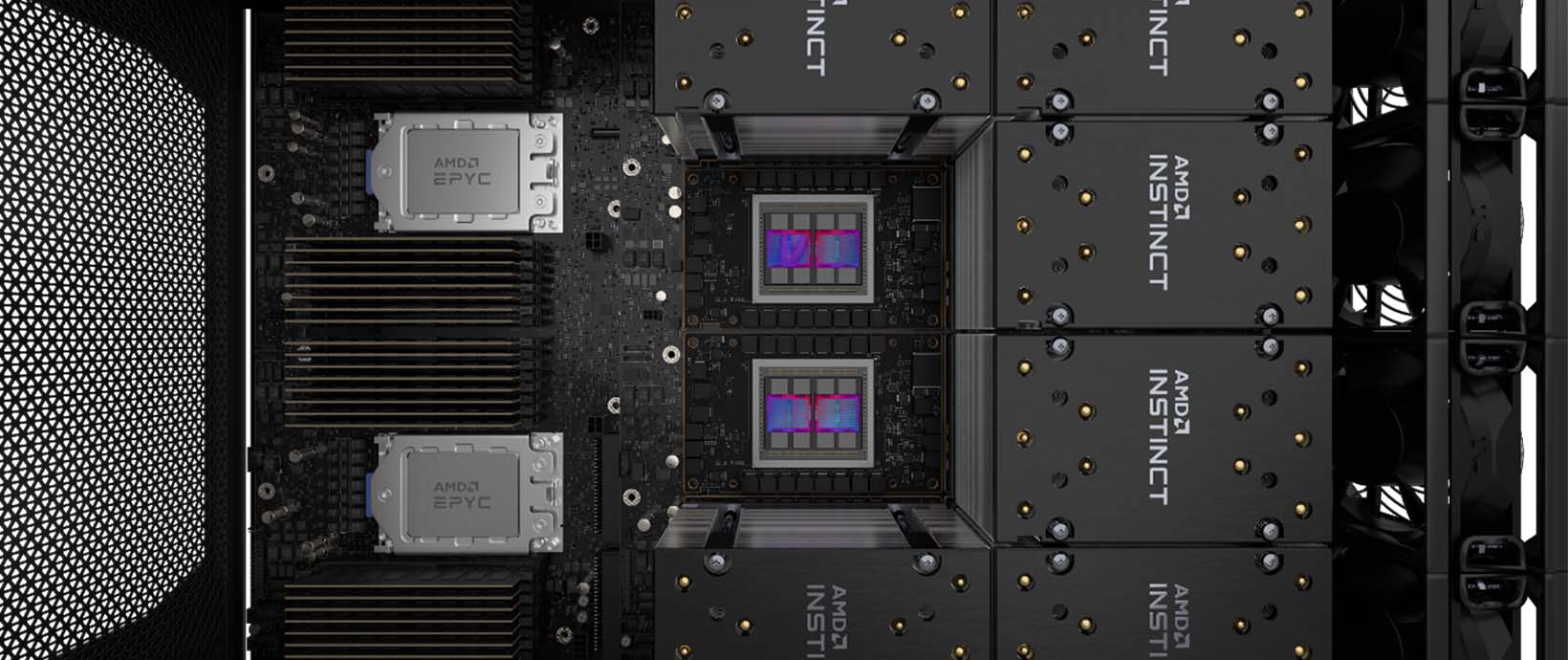

NVIDIA DGX™ systems are purpose-built for deep learning applications. This family of products includes the NVIDIA DGX A100 and NVIDIA DGX Station™ A100 systems. Organizations across industries use NVIDIA DGX to power their AI initiatives and change the world. In fact, nine of the top ten U.S. government institutions, eight of the top ten U.S. national universities, seven of the top ten U.S. hospitals, and seven of the top ten global car manufacturers all use NVIDIA DGX technology.

Workload Management on NVIDIA GPU Architecture

Altair workload management and job scheduling tools, including Altair® PBS Professional® and Altair® Grid Engine®, are optimized for performance in GPU environments such as NVIDIA DGX systems. PBS Professional version 2021.1.1 supports scheduling workloads across multiple GPU servers and multi-node GPU servers for increased throughput and parallel processing. It also supports Multi-Instance GPU (MIG) for workloads that do not fully saturate a GPU’s compute capacity. MIG allows a GPU to be securely partitioned into up to seven separate instances for CUDA® applications.
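To make the effect of partitioning concrete, the short Python sketch below counts how many GPU units a scheduler could hand out on one server. It is illustrative arithmetic only, not part of PBS Professional or any NVIDIA tool. The function name `schedulable_units` and the eight-GPU server in the usage lines are assumptions made for the example; the limit of seven instances per GPU comes from the text above.

```python
MAX_MIG_INSTANCES_PER_GPU = 7  # upper bound on instances per GPU, as stated in the text


def schedulable_units(num_gpus: int, instances_per_gpu: int = 1) -> int:
    """Return how many GPU units a scheduler could allocate on one server.

    instances_per_gpu=1 models whole, unpartitioned GPUs. Larger values model
    every GPU being split into that many instances (hypothetical uniform layout).
    """
    if num_gpus < 0:
        raise ValueError("num_gpus must not be negative")
    if not 1 <= instances_per_gpu <= MAX_MIG_INSTANCES_PER_GPU:
        raise ValueError(
            f"instances_per_gpu must be between 1 and {MAX_MIG_INSTANCES_PER_GPU}"
        )
    return num_gpus * instances_per_gpu


if __name__ == "__main__":
    # Assumed example: a server with eight GPUs.
    print(schedulable_units(8))      # whole GPUs only
    print(schedulable_units(8, 7))   # every GPU split into seven instances
```

Under these assumptions, the same server offers 8 units when GPUs are left whole and 56 units when every GPU is fully partitioned, which is why partitioning helps when individual jobs are small.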

PBS Professional treats GPUs and GPU instances as first-class consumable resources and lets users request them in integer units (for example, 1, 2, or 3). It can automatically detect the GPUs and GPU instances on an NVIDIA DGX system and isolate the ones assigned to each job. PBS Professional schedules and allocates every GPU instance as an equal unit, regardless of instance size. It also provides the same level of GPU scheduling and isolation for container jobs using Singularity and Docker.
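The consumable-resource idea can be sketched in a few lines of Python. This is a toy model written for illustration, not PBS Professional's implementation or API. The class name `GpuPool`, its methods, and the unit identifiers are all hypothetical. It shows three behaviors described above: requests are whole integers, every unit counts the same regardless of size, and a job only sees the units granted to it.

```python
class GpuPool:
    """Toy consumable-resource pool: each GPU or GPU instance is one equal unit."""

    def __init__(self, unit_ids):
        self._free = list(unit_ids)  # units not assigned to any job
        self._held = {}              # job id -> list of units granted to that job

    @property
    def free_count(self):
        return len(self._free)

    def request(self, job_id, count):
        """Grant `count` units to `job_id`, or return None if the job must wait."""
        if not isinstance(count, int) or count < 1:
            raise ValueError("GPU requests must be positive integers")
        if job_id in self._held:
            raise ValueError(f"job {job_id!r} already holds GPU units")
        if count > len(self._free):
            return None  # not enough free units; nothing is partially granted
        granted, self._free = self._free[:count], self._free[count:]
        self._held[job_id] = granted
        return granted

    def release(self, job_id):
        """Return a finished job's units to the pool."""
        self._free.extend(self._held.pop(job_id, []))


if __name__ == "__main__":
    # Hypothetical mix of whole GPUs and GPU instances, all treated equally.
    pool = GpuPool(["gpu0", "gpu1", "mig0", "mig1"])
    print(pool.request("job-a", 2))  # granted two units
    print(pool.request("job-b", 3))  # None: only two units are free
    pool.release("job-a")
    print(pool.request("job-b", 3))  # granted once job-a has finished
```

A real scheduler also enforces isolation at the operating-system or container level so that a job cannot touch units it was not granted; the sketch only tracks the bookkeeping.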

Altair Grid Engine provides rich support for scheduling GPU-aware applications and containers. It delivers efficient workload and resource management capabilities for NVIDIA DGX environments ranging from a single DGX system to a cluster of thousands of GPUs. Altair Grid Engine versions 8.6.0 and later are integrated with NVIDIA Data Center GPU Manager (DCGM), which provides detailed information about GPU resources.

With this integration, Altair Grid Engine has full visibility into GPUs on each host, including GPU type and version; available memory; operating temperature; and socket, core, and thread affinity. This information helps Altair Grid Engine schedule GPU-aware applications more efficiently to optimize both performance and resource use.
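As a rough illustration of how per-GPU information can inform placement, the Python sketch below filters candidate GPUs by type, free memory, and temperature, then prefers the coolest one. It is not Altair Grid Engine's scheduling algorithm and does not call DCGM. The `GpuInfo` fields, the `pick_gpu` function, the 85 °C default limit, and the tie-breaking policy are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class GpuInfo:
    """Hypothetical snapshot of the per-GPU details a scheduler might see."""
    host: str
    gpu_type: str
    free_memory_mib: int
    temperature_c: float


def pick_gpu(
    gpus: Iterable[GpuInfo],
    gpu_type: str,
    min_free_memory_mib: int,
    max_temperature_c: float = 85.0,  # assumed limit, not a vendor value
) -> Optional[GpuInfo]:
    """Return the coolest GPU that meets the job's needs, or None if none does."""
    candidates = [
        g for g in gpus
        if g.gpu_type == gpu_type
        and g.free_memory_mib >= min_free_memory_mib
        and g.temperature_c <= max_temperature_c
    ]
    if not candidates:
        return None
    # Assumed policy: lowest temperature first, then most free memory.
    return min(candidates, key=lambda g: (g.temperature_c, -g.free_memory_mib))


if __name__ == "__main__":
    inventory = [
        GpuInfo("node1", "A100", 20_000, 70.0),
        GpuInfo("node2", "A100", 38_000, 55.0),
        GpuInfo("node3", "V100", 30_000, 40.0),
    ]
    choice = pick_gpu(inventory, gpu_type="A100", min_free_memory_mib=16_000)
    print(choice.host if choice else "no suitable GPU")
```

A production scheduler would weigh more inputs, such as the socket, core, and thread affinity mentioned above, but the filter-then-rank shape is the same.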

Altair Grid Engine has built-in support for Docker and the NVIDIA Container Toolkit, allowing users to manage containerized GPU workloads just like managing any Grid Engine job.

By using Altair workload management solutions to manage GPU workloads on NVIDIA DGX systems, organizations can boost performance, use resources more efficiently, and improve overall productivity. Both PBS Professional and Altair Grid Engine support NVIDIA GPUs for customers in HPC and electronic design automation (EDA). PBS Professional has long been the solution of choice for compute-intensive industries including manufacturing and automotive design, and Altair Grid Engine supports GPU-enabled and distributed computing for many healthcare and life sciences providers.

The Future of GPU-Enabled HPC for AI

The race to stay ahead of the AI wave is on. NVIDIA has distilled the knowledge from field-proven AI deployments around the globe and has built NVIDIA DGX systems with replicable, validated designs that every enterprise can benefit from.

The NVIDIA ecosystem of proven enterprise-grade software, including Altair workload management tools, is fully tested and certified for use on NVIDIA DGX systems, simplifying the deployment, management, and scaling of AI infrastructure.

- Chris Porter, senior technical marketing manager, NVIDIA