Altair One & NVIDIA GPUs on Azure: Accelerating CAE Simulation

Computer-aided engineering (CAE) is essential to modern manufacturing. As designs become more sophisticated, engineers must simulate larger assemblies, apply finer meshes, and account for complex physics interactions, including computational fluid dynamics (CFD), structural mechanics, heat transfer, and electromagnetics (EM).

In June 2025, engineers from Altair and Microsoft collaborated on one of our most ambitious benchmarks to date, running 20 tests across 14 models using Altair GPU-enabled solvers on Microsoft Azure.

In this article, we explain the critical role of GPUs in CAE, showcase the benefits of running simulations on the latest Azure GPU-accelerated instances, and describe how design and engineering teams can get started with Altair One®, Altair’s cloud innovation gateway. With Altair One, engineers can collaborate more effectively and launch and share complete design, simulation, and analysis environments in the Azure cloud.

GPUs and simulation

In the early 2010s, the use of GPUs for CAE was considered niche and experimental. Thanks to dramatic improvements in floating-point performance, larger memories supporting larger models, and faster interconnects, GPUs have become mainstream in engineering simulation.

Today, GPU-native solvers feature algorithms specifically designed to leverage the massive parallelism of modern GPUs. These solvers automatically partition models based on the number of available GPUs, reducing setup effort and ensuring that simulations are balanced to achieve the highest possible throughput.

While performance can vary widely depending on the model and simulation, large CFD models can run anywhere from 10 to 18 times faster on GPUs compared to CPUs alone.1 Altair offers both GPU-native and GPU-accelerated solvers for multiple physics domains, including CFD, thermal analysis, structural analysis, and EM.

Azure GPU-accelerated VMs

Running simulations on Azure offers many benefits for manufacturers, both large and small. By operating in the cloud, engineers can easily access state-of-the-art hardware and run simulations at scale without worrying about on-premises infrastructure. Design and engineering teams enjoy:

- On-demand access to the latest CPUs and GPUs

- Faster deployment times for compute and storage

- Seamless scaling to address changing business needs

- Predictable pricing with flexible, consumption-based cost models

Microsoft offers multiple GPU-accelerated VMs that are ideal for CAE, including the HPC-optimized ND-H100-v5 and ND-H200-v5 series VMs, which feature eight NVIDIA® H100 SXM™ Tensor Core and NVIDIA® H200 SXM™ Tensor Core GPUs, respectively.

The Standard_ND96isr_H100_v5 VM provides 96 vCPUs, 1,900 GB of system memory, and 8 x NVIDIA H100 Tensor Core GPUs (SXM form factor), each with 80 GB of HBM3 memory and 3.35 TB/s of memory bandwidth. The Standard_ND96isr_H200_v5 VM offers similar VM-level specs but is powered by eight NVIDIA H200 GPUs, each with 141 GB of HBM3e memory and 4.8 TB/s of memory bandwidth.

About the benchmarks

To illustrate the performance and scalability of GPU-enabled solvers on Azure, engineers from Microsoft and Altair ran a series of 20 tests using three solvers accessible through Altair One, as illustrated below. Each model was run on Standard_ND96isr_H100_v5 and Standard_ND96isr_H200_v5 instances, varying the number of GPUs. Some models were run multiple times, reflecting different numbers of timesteps and spatial resolutions.2

- The first tests involved Altair® ultraFluidX®, a GPU-native solver that uses the Lattice-Boltzmann Method (LBM) for the rapid prediction of aerodynamic properties.

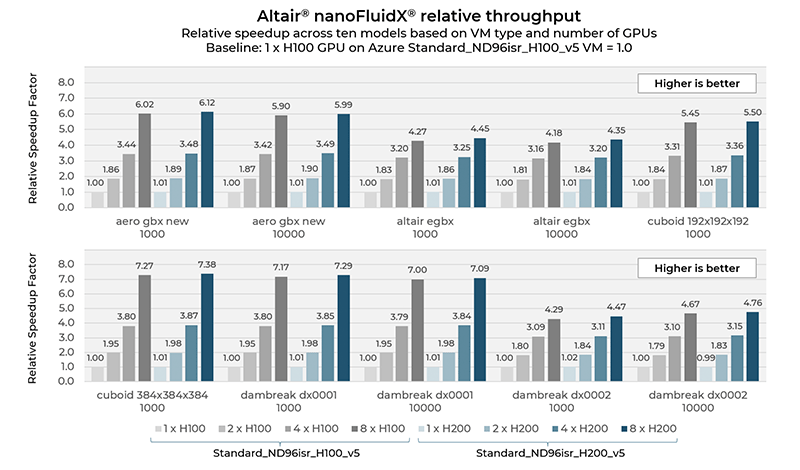

- In a second set of tests, the team ran Altair® nanoFluidX®, a GPU-based smoothed-particle hydrodynamics (SPH) solver used to predict fluid flow around complex moving geometries. This set comprised 11 benchmarks across five models.

- A final set of tests involved Altair® EDEM™, a solver based on the discrete element method (DEM) that simulates the handling of bulk and granular materials. These tests used seven standard Altair models.

Breakthrough performance in the Azure cloud

ultraFluidX results

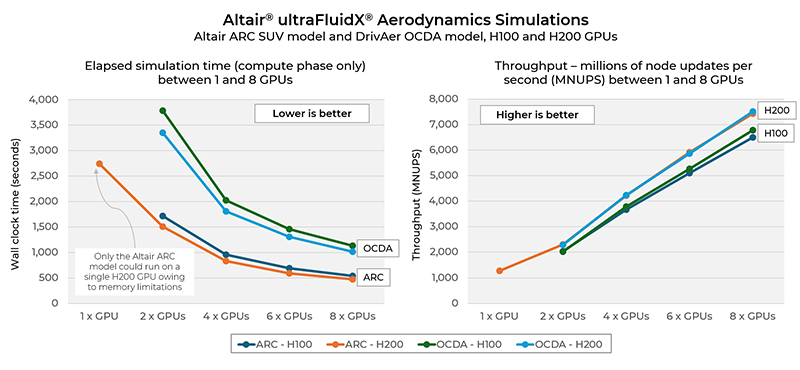

The ultraFluidX benchmarks simulated external aerodynamics for two reference geometries:

- Altair’s ARC SUV model simulated over 0.05 seconds

- The Ford Open Cooling DrivAer (OCDA) model simulated over 0.19 seconds.3

These benchmarks highlight the performance benefits of scaling ultraFluidX from a single GPU to an eight-GPU configuration on Azure ND v5 VMs.

For the ARC model, elapsed time for the computational phase decreased from 2,744 seconds (45m 44s) on a single H200 GPU to just 470 seconds (7m 50s) on eight H200 GPUs. This represents a 5.83x speedup, corresponding to 73% strong scaling efficiency, and underscores the excellent multi-GPU scalability of ultraFluidX.4
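The arithmetic behind these figures can be reproduced with a short Python sketch. It uses the elapsed times quoted above and the throughput values (MNUPS) from footnote 4; the function names are illustrative and not part of any Altair or Azure tooling.

```python
def speedup_from_times(t_baseline_s: float, t_scaled_s: float) -> float:
    """Speedup as the ratio of baseline runtime to scaled runtime."""
    return t_baseline_s / t_scaled_s


def speedup_from_throughput(baseline: float, scaled: float) -> float:
    """Speedup as the ratio of scaled throughput to baseline throughput."""
    return scaled / baseline


def strong_scaling_efficiency(speedup: float, gpu_ratio: float) -> float:
    """Fraction of the ideal (linear) speedup that was actually achieved."""
    return speedup / gpu_ratio


# ARC model on H200 GPUs: one GPU vs. eight GPUs (figures from this article).
time_speedup = speedup_from_times(2744, 470)
throughput_speedup = speedup_from_throughput(1273.49, 7421.08)
efficiency = strong_scaling_efficiency(throughput_speedup, gpu_ratio=8)

print(f"Speedup from elapsed times: {time_speedup:.2f}x")
print(f"Speedup from throughput:    {throughput_speedup:.2f}x")
print(f"Strong scaling efficiency:  {efficiency:.0%}")
```

The two speedup values differ slightly in the second decimal place because the elapsed times are rounded to whole seconds; the 5.83x figure follows from the throughput values.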

It was not possible to run the 118 GB ARC model on a single H100 GPU, as the model exceeded the H100’s 80 GB memory capacity. Similarly, the OCDA model could not be run on a single H100 or H200 GPU due to its memory footprint.
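As a rough first check of whether a model fits, the footprint can be compared against per-GPU memory. The sketch below is a simplification: it assumes the model partitions evenly across GPUs and ignores any solver overhead, and `min_gpus_for_model` is a hypothetical helper rather than an Altair or Azure function. Only the 118 GB ARC footprint and the per-GPU memory sizes come from this article.

```python
import math
from typing import Optional


def min_gpus_for_model(model_gb: float, gpu_memory_gb: float,
                       max_gpus: int = 8) -> Optional[int]:
    """Smallest GPU count whose combined memory holds the model.

    Assumes an even partition with no per-GPU overhead (a simplification).
    Returns None if the model does not fit within max_gpus.
    """
    needed = math.ceil(model_gb / gpu_memory_gb)
    return needed if needed <= max_gpus else None


ARC_MODEL_GB = 118                            # footprint quoted in the article
GPU_MEMORY_GB = {"H100": 80, "H200": 141}     # per-GPU HBM capacity

for gpu, memory_gb in GPU_MEMORY_GB.items():
    count = min_gpus_for_model(ARC_MODEL_GB, memory_gb)
    print(f"{gpu}: at least {count} GPU(s) for a {ARC_MODEL_GB} GB model")
```

In practice, the real minimum may be higher than this estimate because solvers need additional memory beyond the model itself.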

Using all eight GPUs, the Standard_ND96isr_H200_v5 VM provided a 1.14x speedup relative to eight GPUs on the Standard_ND96isr_H100_v5 VM, likely owing to the higher HBM3e memory bandwidth on the NVIDIA H200 (4.8 TB/s vs. 3.35 TB/s).5
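For context, the observed gain can be set against the ratio of the two memory bandwidths. This is only a back-of-the-envelope comparison using the figures quoted above; it does not model the solver, and the variable names are illustrative.

```python
# Per-GPU memory bandwidth quoted in this article (TB/s).
H100_BANDWIDTH_TBS = 3.35
H200_BANDWIDTH_TBS = 4.8

# Speedup of 8 x H200 over 8 x H100 reported in this article.
OBSERVED_SPEEDUP = 1.14

bandwidth_ratio = H200_BANDWIDTH_TBS / H100_BANDWIDTH_TBS

print(f"Memory bandwidth ratio (H200/H100): {bandwidth_ratio:.2f}x")
print(f"Observed eight-GPU speedup:         {OBSERVED_SPEEDUP:.2f}x")
```

The observed speedup is smaller than the bandwidth ratio, which suggests that memory bandwidth is one contributing factor rather than the only one.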

nanoFluidX results

A set of ten nanoFluidX benchmark cases was executed on Microsoft Azure Standard_ND96isr_H100_v5 and Standard_ND96isr_H200_v5 virtual machines, scaling from one to eight GPUs.
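The count of ten cases is consistent with the run matrix described in footnote 2. The sketch below enumerates that matrix; it assumes the dambreak model was run for every combination of timestep count and spatial resolution, which the footnote implies but does not state outright. The dictionary keys are illustrative labels, not nanoFluidX parameters.

```python
from itertools import product

TIMESTEPS = [1_000, 10_000]
cases = []

# Gearbox models: one run per timestep count.
for model in ("aero_gbx", "e-gbx"):
    cases += [(model, {"timesteps": n}) for n in TIMESTEPS]

# Cube model: one run per grid resolution; particle count is the grid volume.
for edge in (192, 384):
    cases.append(("cuboid", {"grid": (edge, edge, edge), "particles": edge ** 3}))

# Dambreak model: assumed full combination of timesteps and resolution (dx).
for n, dx_mm in product(TIMESTEPS, (0.1, 0.2)):
    cases.append(("dambreak", {"timesteps": n, "dx_mm": dx_mm}))

for name, params in cases:
    print(name, params)
print(f"Total cases: {len(cases)}")
```

The cube grids work out to about 7 million and 57 million particles, matching the figures in the footnote.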

Across ten test cases, moving from one H100 GPU to eight H200 GPUs on the Azure ND v5 instances delivered an average 5.74x speedup, equivalent to 72% strong scaling efficiency. This substantial reduction in turnaround time enables engineering teams to explore more design alternatives within tight development timelines.

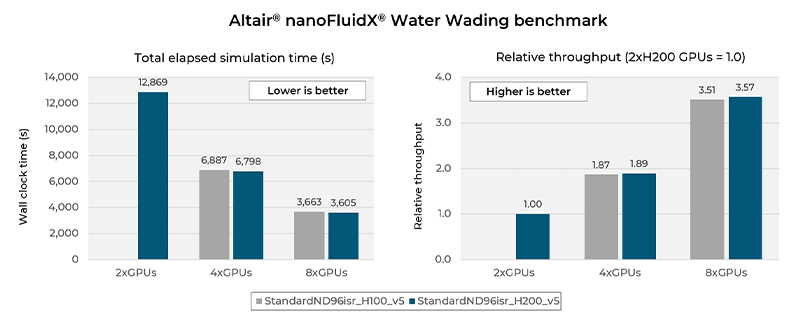

Additionally, a production-scale "water wading" simulation was conducted on both instances. This test simulated an Altair CX-1 vehicle moving at 10 km/h through a 24-meter-long water channel over a 15-second physical time interval.

Due to the memory demands of the water wading model, the minimum viable configuration was two H200 GPUs. Moving from that two-GPU baseline to eight H200 GPUs reduced the total runtime from 12,869 seconds (3h 34m 29s) to 3,605 seconds (1h 0m 5s), a 3.57x speedup.
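Because the baseline here is not a single GPU, scaling efficiency has to be computed relative to the increase in GPU count. The following sketch assumes the 12,869-second run is the two-GPU baseline and uses an illustrative `format_hms` helper to check the time conversions.

```python
def format_hms(total_seconds: int) -> str:
    """Format a duration in seconds as hours, minutes, and seconds."""
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours}h {minutes}m {seconds}s"


# Water wading runtimes from this article; baseline assumed to be 2 GPUs.
BASELINE_GPUS, SCALED_GPUS = 2, 8
BASELINE_S, SCALED_S = 12_869, 3_605

speedup = BASELINE_S / SCALED_S
gpu_ratio = SCALED_GPUS / BASELINE_GPUS          # 4x more GPUs
relative_efficiency = speedup / gpu_ratio        # share of ideal 4x achieved

print(f"{BASELINE_GPUS} GPUs: {format_hms(BASELINE_S)}")
print(f"{SCALED_GPUS} GPUs: {format_hms(SCALED_S)}")
print(f"Speedup: {speedup:.2f}x on {gpu_ratio:.0f}x the GPUs "
      f"({relative_efficiency:.0%} relative efficiency)")
```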

EDEM results

Altair EDEM benefits enormously from GPU-based acceleration. In prior Altair benchmarks involving 2 x NVIDIA A100 GPUs and the same models tested here, performance improvements ranged from 26.8x faster to 187.1x faster compared to a 32-core CPU.6

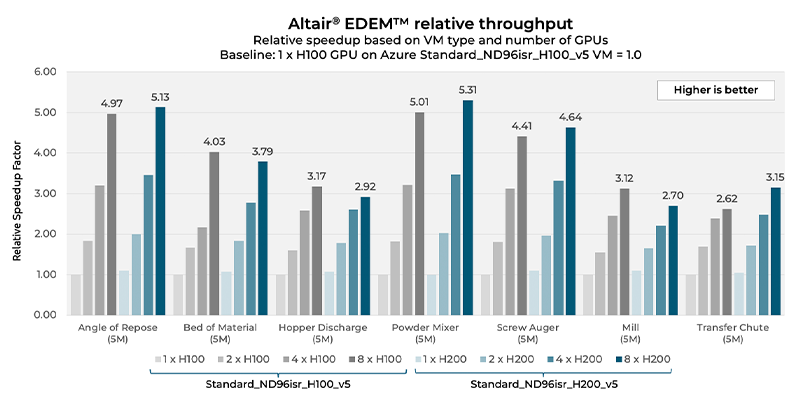

The EDEM benchmarks were run on both the Standard_ND96isr_H100_v5 and Standard_ND96isr_H200_v5 VMs for each model with one, two, four, and eight GPUs. Scalability varied depending on the model. Running with all eight GPUs on the Standard_ND96isr_H100_v5 VM resulted in an average 3.90x speedup relative to a single H100 GPU across the tested models. The Standard_ND96isr_H200_v5 VM showed a slightly higher average speedup of 3.95x. The best result was achieved with the Powder Mixer model, where the 8 x H200 GPU configuration ran 5.31x faster than a single H100 GPU, reducing the runtime from approximately 45 minutes to 8 minutes.

As with the other solvers tested, the larger memory and higher memory bandwidth of the Standard_ND96isr_H200_v5 VM delivered slightly better EDEM throughput than the H100 VM in all test cases. The best choice will depend on whether models fit in memory, instance pricing, and instance availability.

The bottom line

Running GPU-accelerated Altair One simulations on Microsoft Azure can deliver both breakthrough performance and business agility, and a single GPU-powered cloud instance can sometimes replace an entire cluster. Manufacturers can realize multiple benefits, including:

- Superior time-to-solution: Across 20 Altair CFD™ and Altair EDEM test cases, scaling to eight GPUs on Azure VMs cut runtimes by up to roughly 5-6x versus a single GPU. EDEM particle simulations achieved a relative speedup of up to 5.31x on eight H200 GPUs, cutting the Powder Mixer runtime from approximately 45 minutes to 8 minutes.

- Right-sized economics: Microsoft Azure ND-H100-v5 series VMs provide outstanding throughput and value for most simulation workloads. The additional memory and bandwidth offered by the ND-H200-v5 series VMs provide engineers with a performance boost and headroom for oversized meshes and AI workloads.

- Effortless scale, management, and governance: Altair One and Microsoft Azure abstract away the complexity of HPC. With Altair One appliance templates, engineers can provision Azure cloud infrastructure and launch jobs in just minutes, while IT retains full visibility into spending and resource governance.

Getting started in the Azure Cloud

Altair One is a cloud service for collaborative engineering. Built on the Azure Cloud, it provides a robust, high-performance computing backbone for simulation.7 The Altair One portal provides access to the solvers described above, along with hundreds of design, engineering, and analytics tools.

With a simple appliance-based deployment model, Altair One automatically deploys and manages critical software components on Azure, including software tools, workload managers, remote access solutions, and prerequisite middleware and libraries, allowing engineers to focus on their work.

Register for an Altair One account by visiting admin.altairone.com/register.

If you don’t already have a Microsoft Azure account, you can obtain one by visiting azure.microsoft.com/en-us/pricing/purchase-options/azure-account.

1 Based on an Altair comparison that simulated a DCT gearbox case with a smoothed-particle hydrodynamics (SPH) solver on servers powered by 32-core Intel® Xeon® processors versus a server with 4 x NVIDIA Tesla V100 GPUs. Details are provided in the Altair® nanoFluidX® documentation.

2 The aero_gbx and Altair e-gbx models were each run with 1,000 and 10,000 timesteps. The Altair simple cube model (cuboid) was run with spatial resolutions of 192x192x192 (7M particles) and 384x384x384 (57M particles). The dambreak model was run with 1,000 and 10,000 timesteps and with spatial resolutions (dx) of 0.1 mm and 0.2 mm.

3 The aerodynamics simulations were run just long enough to provide a reliable average throughput. In a production simulation, runtimes would typically be 20x longer or more.

4 The throughput for the ARC model on a single H200 GPU during the simulation’s compute phase was 1,273.49 million node updates per second (MNUPS). Eight H200 GPUs delivered a throughput of 7,421.08 MNUPS, a 5.83x improvement. 5.83x represents 73% of the maximum theoretical 8x speedup achievable with 8 GPUs (5.83/8).

5 See NVIDIA H100 SXM specifications and NVIDIA H200 SXM specifications.

6 The Screw Auger model ran 26.8x faster on 2 x NVIDIA A100 GPUs compared to a 32-core CPU. The Bed of Material model ran 187.1x faster. Details are available at community.altair.com.

7 Microsoft Azure is Altair One's default cloud platform. Users can also run workloads on local servers or clusters or deploy simulations to Azure and third-party clouds by providing appropriate credentials.